Deep Retinex Decomposition for Low-Light Enhancement

BMVC Oral Presentation, 2018 | arXiv

Paper | Supplementary | PPT | Poster

* indicates equal contributions.

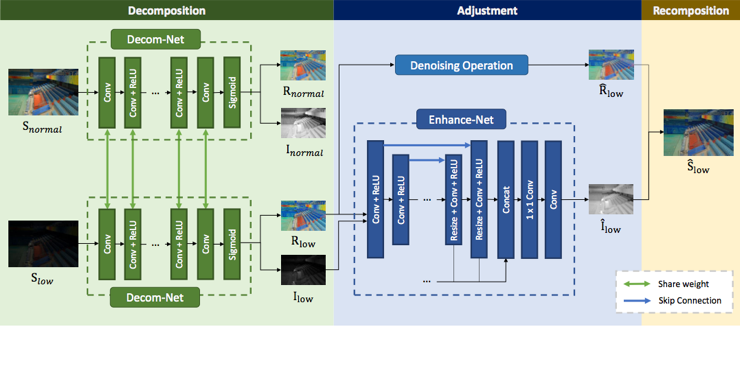

Figure 1: The proposed framework for Retinex-Net. The enhancement process is divided into three steps: decomposition, adjustment and reconstruction. In the decomposition step, a subnetwork Decom-Net decomposes the input image into reflectance and illumination. In the following adjustment step, an encoder-decoder based Enhance-Net brightens up the illumination. Multi-scale concatenation is introduced to adjust the illumination from multi-scale perspectives. Noise on the reflectance is also removed at this step. Finally, we reconstruct the adjusted illumination and reflectance to get the enhanced result.
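The three steps in Figure 1 can be sketched with simple stand-ins. This is a minimal illustration of the data flow only, not the authors' implementation: the max-channel illumination estimate and the gamma curve are hypothetical placeholders for the learned Decom-Net and Enhance-Net, and the names `decompose`, `adjust`, `reconstruct`, `enhance`, `eps` and `gamma` are assumptions introduced for this example. Denoising of the reflectance is omitted.

```python
import numpy as np

def decompose(image, eps=1e-4):
    """Stand-in for Decom-Net (assumption): split an RGB image in [0, 1]
    into reflectance (H x W x 3) and illumination (H x W x 1)."""
    illumination = image.max(axis=2, keepdims=True)  # brightest channel per pixel
    reflectance = image / (illumination + eps)       # so that image ~= R * I
    return reflectance, illumination

def adjust(illumination, gamma=0.4):
    """Stand-in for Enhance-Net (assumption): brighten the illumination
    with a fixed gamma curve instead of a learned encoder-decoder."""
    return np.power(illumination, gamma)

def reconstruct(reflectance, illumination):
    """Recombine reflectance and illumination by element-wise product."""
    return np.clip(reflectance * illumination, 0.0, 1.0)

def enhance(image):
    """Decomposition -> adjustment -> reconstruction."""
    reflectance, illumination = decompose(image)
    return reconstruct(reflectance, adjust(illumination))

# Toy low-light input: random RGB values in a dark range.
rng = np.random.default_rng(0)
low_light = rng.uniform(0.0, 0.2, size=(4, 4, 3))
enhanced = enhance(low_light)
```

Keeping the reflectance fixed and changing only the illumination is what lets the adjustment brighten the image without altering the scene content.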

Abstract

The Retinex model is an effective tool for low-light image enhancement. It assumes that observed images can be decomposed into reflectance and illumination. Most existing Retinex-based methods rely on carefully designed hand-crafted constraints and parameters for this highly ill-posed decomposition, which may be limited by model capacity when applied to various scenes. In this paper, we collect a LOw-Light dataset (LOL) containing low/normal-light image pairs and propose a deep Retinex-Net learned on this dataset, including a Decom-Net for decomposition and an Enhance-Net for illumination adjustment. In the training process for Decom-Net, there is no ground truth for the decomposed reflectance and illumination. The network is learned with only key constraints, including the consistent reflectance shared by paired low/normal-light images and the smoothness of illumination. Based on the decomposition, subsequent lightness enhancement is conducted on the illumination by an enhancement network called Enhance-Net, and for joint denoising there is a denoising operation on the reflectance. The Retinex-Net is end-to-end trainable, so that the learned decomposition is by nature good for lightness adjustment. Extensive experiments demonstrate that our method not only achieves visually pleasing quality for low-light enhancement but also provides a good representation of image decomposition.
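The training constraints named in the abstract can be illustrated with a simplified loss. The sketch below assumes the usual Retinex product model (image = reflectance × illumination, element-wise) and uses a plain L1 reflectance-consistency term and a plain gradient-magnitude smoothness term. The exact loss terms and weights used in the paper are not given on this page, so the function names and the weights `w_consistency` and `w_smooth` are hypothetical.

```python
import numpy as np

def reconstruction_error(reflectance, illumination, image):
    """L1 error between R * I and the observed image (I is H x W)."""
    return np.mean(np.abs(reflectance * illumination[..., None] - image))

def reflectance_consistency(r_low, r_normal):
    """Paired low/normal-light images should share one reflectance."""
    return np.mean(np.abs(r_low - r_normal))

def illumination_smoothness(illumination):
    """Mean absolute horizontal and vertical gradient of the illumination."""
    dx = np.abs(np.diff(illumination, axis=1))
    dy = np.abs(np.diff(illumination, axis=0))
    return dx.mean() + dy.mean()

def decomposition_loss(r_low, i_low, s_low, r_normal, i_normal, s_normal,
                       w_consistency=0.1, w_smooth=0.1):
    """Weighted sum of the three terms; the weights are illustrative assumptions."""
    recon = (reconstruction_error(r_low, i_low, s_low)
             + reconstruction_error(r_normal, i_normal, s_normal))
    consistency = reflectance_consistency(r_low, r_normal)
    smooth = illumination_smoothness(i_low) + illumination_smoothness(i_normal)
    return recon + w_consistency * consistency + w_smooth * smooth

# Ideal toy pair: one shared reflectance under two constant illuminations.
rng = np.random.default_rng(0)
shared_r = rng.uniform(0.2, 1.0, size=(8, 8, 3))
i_low = np.full((8, 8), 0.1)
i_normal = np.full((8, 8), 0.9)
s_low = shared_r * i_low[..., None]
s_normal = shared_r * i_normal[..., None]
loss_ideal = decomposition_loss(shared_r, i_low, s_low, shared_r, i_normal, s_normal)
```

For this ideal pair every term vanishes. Any decomposition that assigns different reflectances to the two images, or a non-smooth illumination, is penalized, which is how the constraints can guide training without ground-truth decompositions.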

Subjective Results

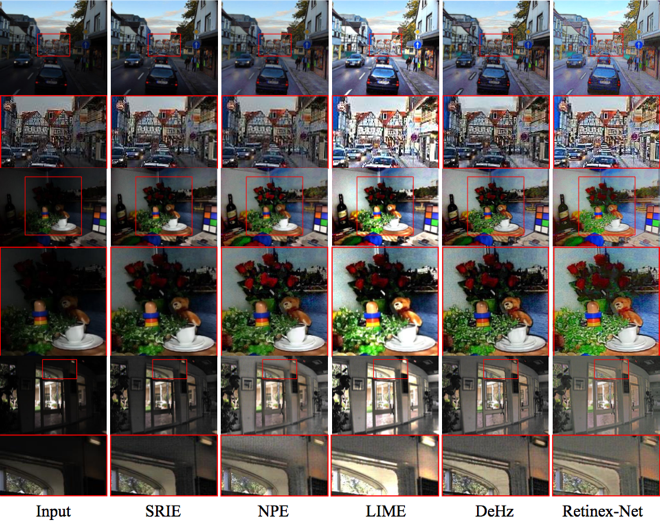

We compare our Retinex-Net with four state-of-the-art methods: a de-hazing based method (DeHz) [1], the naturalness preserved enhancement algorithm (NPE) [2], the simultaneous reflectance and illumination estimation algorithm (SRIE) [3], and the illumination map estimation based method (LIME) [4]. Fig. 2 shows a visual comparison on three natural images.

Figure 2: The results using different methods on natural images: (top-to-bottom) Street from LIME dataset [4], Still lives from LIME dataset, and Room from MEF dataset [5].

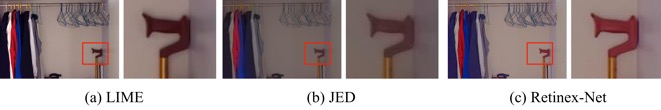

We compare our joint-denoising Retinex-Net with two methods: LIME with denoising post-processing, and JED [6], a recent joint low-light enhancement and denoising method. Fig. 3 shows a visual comparison on Wardrobe from the LOL dataset.

Figure 3: The joint denoising results using different methods on Wardrobe in LOL Dataset.

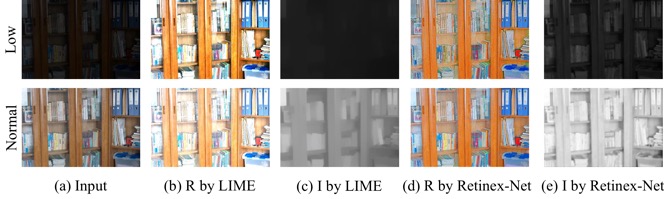

In Fig. 4 we illustrate a low/normal-light image pair from the evaluation set of our LOL dataset, as well as the reflectance and illumination maps decomposed by Decom-Net and LIME.

Figure 4: The decomposition results using our Decom-Net and LIME on Bookshelf in the LOL dataset. In our results, the reflectance of the low-light image resembles the reflectance of the normal-light image, except for the amplified noise in dark regions that occurs in real scenes.

See supplementary for more results.

Download Links

- Datasets

LOw Light paired dataset (LOL): Google Drive, Baidu Pan (Code:acp3)

Synthetic Image Pairs from Raw Images: Google Drive, Baidu Pan

Testing Images: Google Drive, Baidu Pan

- Codes

Citation

@inproceedings{Chen2018Retinex,
  title={Deep Retinex Decomposition for Low-Light Enhancement},
  author={Chen Wei and Wenjing Wang and Wenhan Yang and Jiaying Liu},
  booktitle={British Machine Vision Conference},
  year={2018},
}

References

[1] Xuan Dong, Guan Wang, Yi Pang, Weixin Li, Jiangtao Wen, Wei Meng, and Yao Lu. Fast efficient algorithm for enhancement of low lighting video. In IEEE International Conference on Multimedia and Expo, pages 1–6, 2011.

[2] Shuhang Wang, Jin Zheng, Hai Miao Hu, and Bo Li. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Transactions on Image Processing, 22(9):3538–3548, 2013.

[3] Xueyang Fu, Delu Zeng, Yue Huang, Xiaoping Zhang, and Xinghao Ding. A weighted variational model for simultaneous reflectance and illumination estimation. In Computer Vision and Pattern Recognition, pages 2782–2790, 2016.

[4] Xiaojie Guo, Yu Li, and Haibin Ling. LIME: Low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing, 26(2):982–993, 2017.

[5] Keda Ma, Kai Zeng, and Zhou Wang. Perceptual quality assessment for multi-exposure image fusion. IEEE Transactions on Image Processing, 24(11):3345, 2015.

[6] Xutong Ren, Mading Li, Wen-Huang Cheng, and Jiaying Liu. Joint enhancement and denoising method via sequential decomposition. In Circuits and Systems (ISCAS), 2018 IEEE International Symposium on, pages 1–5. IEEE, 2018.