Abstract

In recent years, neural style transfer has attracted growing attention, especially for images. However, temporally consistent style transfer for videos remains a challenging problem. Existing methods either rely on large amounts of video data with optical flow or use single-frame regularizers; they fail to handle strong motion or complex variations and therefore perform poorly on real videos. We first identify the cause of the conflict between style transfer and temporal consistency, and propose to reconcile it by relaxing the objective function so that the stylization loss term becomes more robust to motion. With this relaxation, style transfer tolerates inter-frame variation without degrading the subjective effect. Based on relaxation and regularization, we design a zero-shot video style transfer framework. Moreover, for better feature migration, we introduce a new module that dynamically adjusts inter-channel distributions. Quantitative and qualitative results demonstrate the superiority of our method over state-of-the-art style transfer methods.

Consistent Video Style Transfer

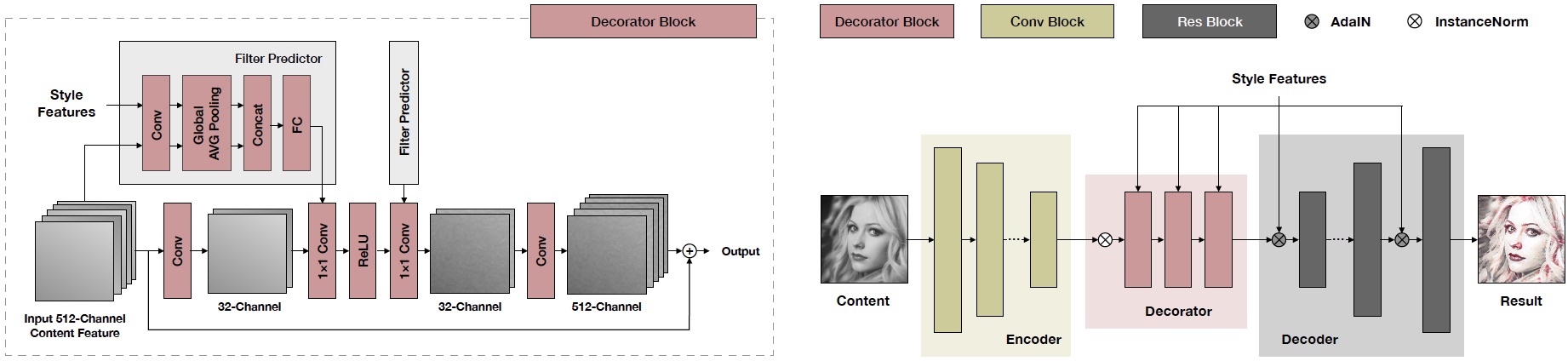

Figure 1. Left: the proposed decorator block for inter-channel feature adjustment. Both the target style features and the input content features are fed into a shallow sub-network, the Filter Predictor, which predicts filters. Residual learning and dimensionality reduction are used to improve efficiency. Right: the overall architecture of the proposed encoder-decoder style transfer network.
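The data flow described in the caption can be illustrated with a minimal NumPy sketch. It is not the released implementation: the function name `decorator_block`, the use of global average pooling, the single linear layer standing in for the Filter Predictor, and all weight shapes are assumptions chosen only to show the four ingredients named above (dimensionality reduction, filter prediction from both inputs, inter-channel filtering, and a residual connection).

```python
import numpy as np

def decorator_block(content, style, w_reduce, w_predict, w_expand):
    """Toy inter-channel adjustment block (hypothetical layout, see lead-in).

    content, style: feature maps of shape (C, H, W); spatial sizes may differ.
    w_reduce:  (K, C)     1x1 convolution reducing C channels to K.
    w_predict: (K*K, 2*K) linear layer standing in for the Filter Predictor.
    w_expand:  (C, K)     1x1 convolution restoring C channels.
    """
    k = w_reduce.shape[0]

    # Dimensionality reduction: a 1x1 convolution is a per-pixel matrix product.
    content_small = np.einsum("kc,chw->khw", w_reduce, content)
    style_small = np.einsum("kc,chw->khw", w_reduce, style)

    # Filter prediction: pool both inputs, concatenate, map to a K x K filter.
    descriptor = np.concatenate(
        [content_small.mean(axis=(1, 2)), style_small.mean(axis=(1, 2))]
    )
    predicted_filter = (w_predict @ descriptor).reshape(k, k)

    # Inter-channel adjustment: apply the predicted filter as a 1x1 convolution.
    mixed = np.einsum("jk,khw->jhw", predicted_filter, content_small)

    # Residual learning: expand back to C channels and add to the input.
    return content + np.einsum("ck,khw->chw", w_expand, mixed)
```

Because the predicted filter depends on both the content and the style features, the channel mixing changes per input pair, which is one way to read "dynamically adjust inter-channel distributions" in the abstract.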

Resources

- Paper: IEEE Xplore

- Code: Github

- Supplementary (PDF+Video): Google Drive, Baidu Pan [397w]

Citation

@article{ReReVST2020,
  author = {Wang, Wenjing and Yang, Shuai and Xu, Jizheng and Liu, Jiaying},
  title = {Consistent Video Style Transfer via Relaxation and Regularization},
  journal = {{IEEE} Trans. Image Process.},
  year = {2020}
}

Selected Results

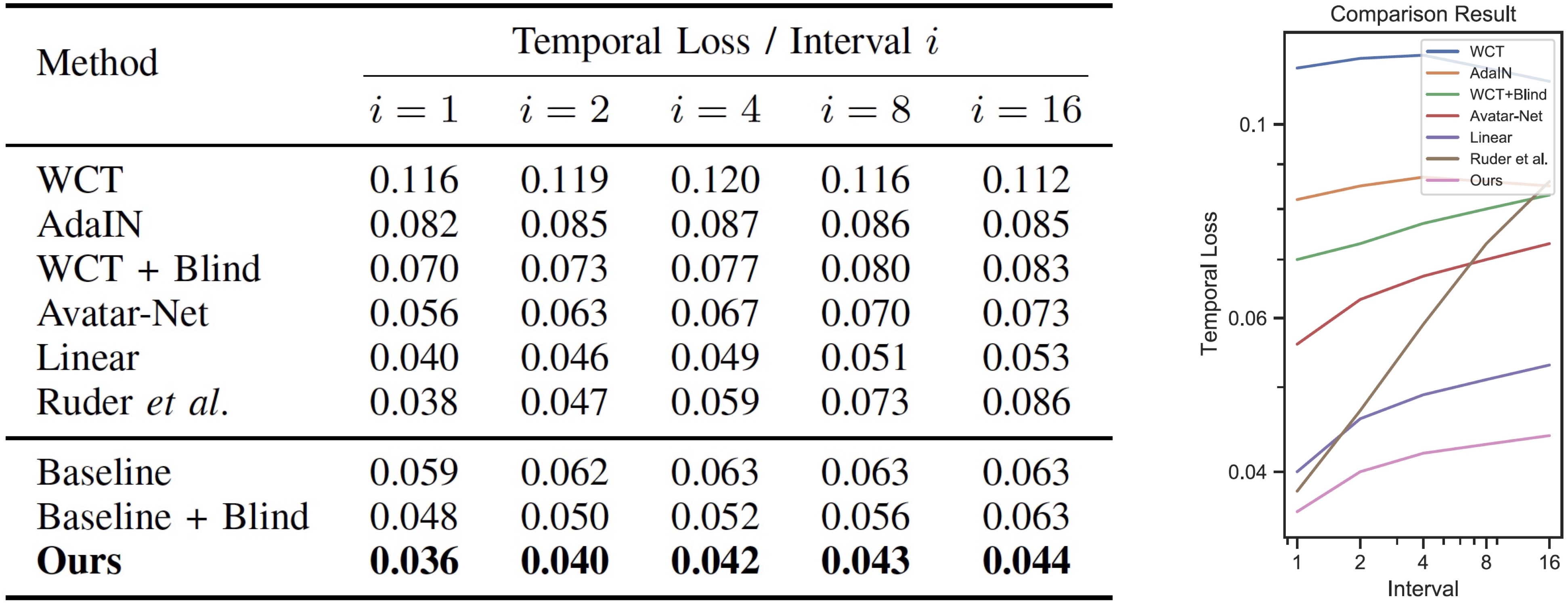

Figure 2. Quantitative evaluation of temporal consistency. Our model yields the lowest temporal loss for all temporal lengths.
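For readers unfamiliar with the metric, the sketch below shows one common way a temporal loss between two stylized frames is computed: a masked mean squared difference between the current stylized frame and the previous stylized frame warped to it. This is an assumption about the general form of the metric, not the exact evaluation code behind Figure 2. The function name `temporal_error` is hypothetical, and the optical-flow warping and occlusion-mask estimation are assumed to be done beforehand.

```python
import numpy as np

def temporal_error(stylized_prev_warped, stylized_curr, valid_mask):
    """Masked mean squared difference between two stylized frames.

    stylized_prev_warped: (H, W, C) previous stylized frame, already warped
        to the current frame with optical flow (warping is not shown here).
    stylized_curr: (H, W, C) current stylized frame.
    valid_mask: (H, W) array, 1 where the flow is reliable, 0 where the
        pixel is occluded or the flow is untrusted.
    """
    prev = np.asarray(stylized_prev_warped, dtype=np.float64)
    curr = np.asarray(stylized_curr, dtype=np.float64)
    # Add a trailing axis so the mask broadcasts over colour channels.
    mask = np.asarray(valid_mask, dtype=np.float64)[..., None]

    squared_diff = mask * (curr - prev) ** 2
    n_valid = mask.sum() * curr.shape[-1]
    if n_valid == 0:
        return 0.0  # no reliable pixels to compare
    return float(squared_diff.sum() / n_valid)
```

Evaluating at a longer temporal length would, under this reading, mean comparing frames that are further apart, with the warp composed over the intermediate flows.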

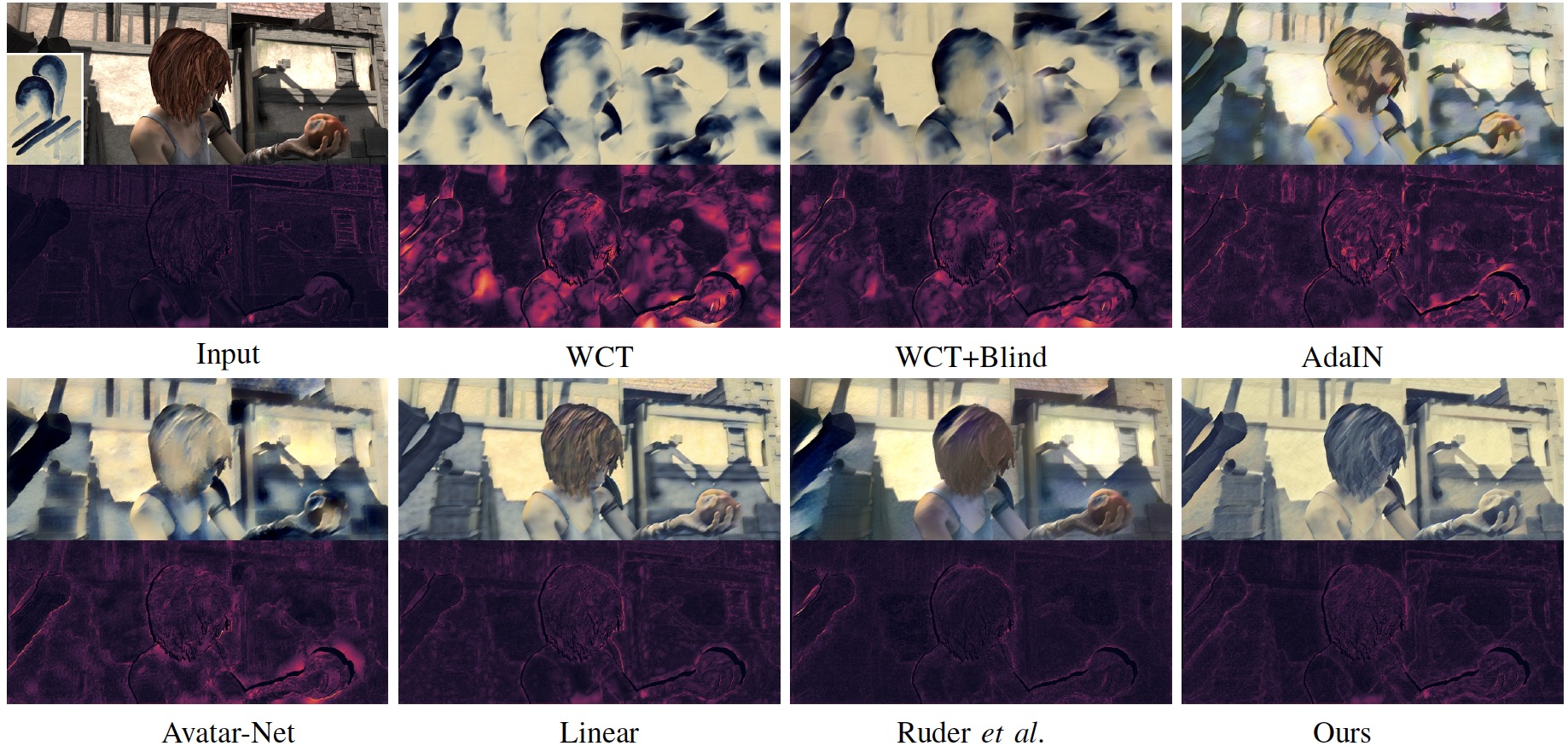

Figure 3. Comparisons on video style transfer. The bottom of each row shows the temporal error heat map. Our method best balances stylization and temporal consistency.

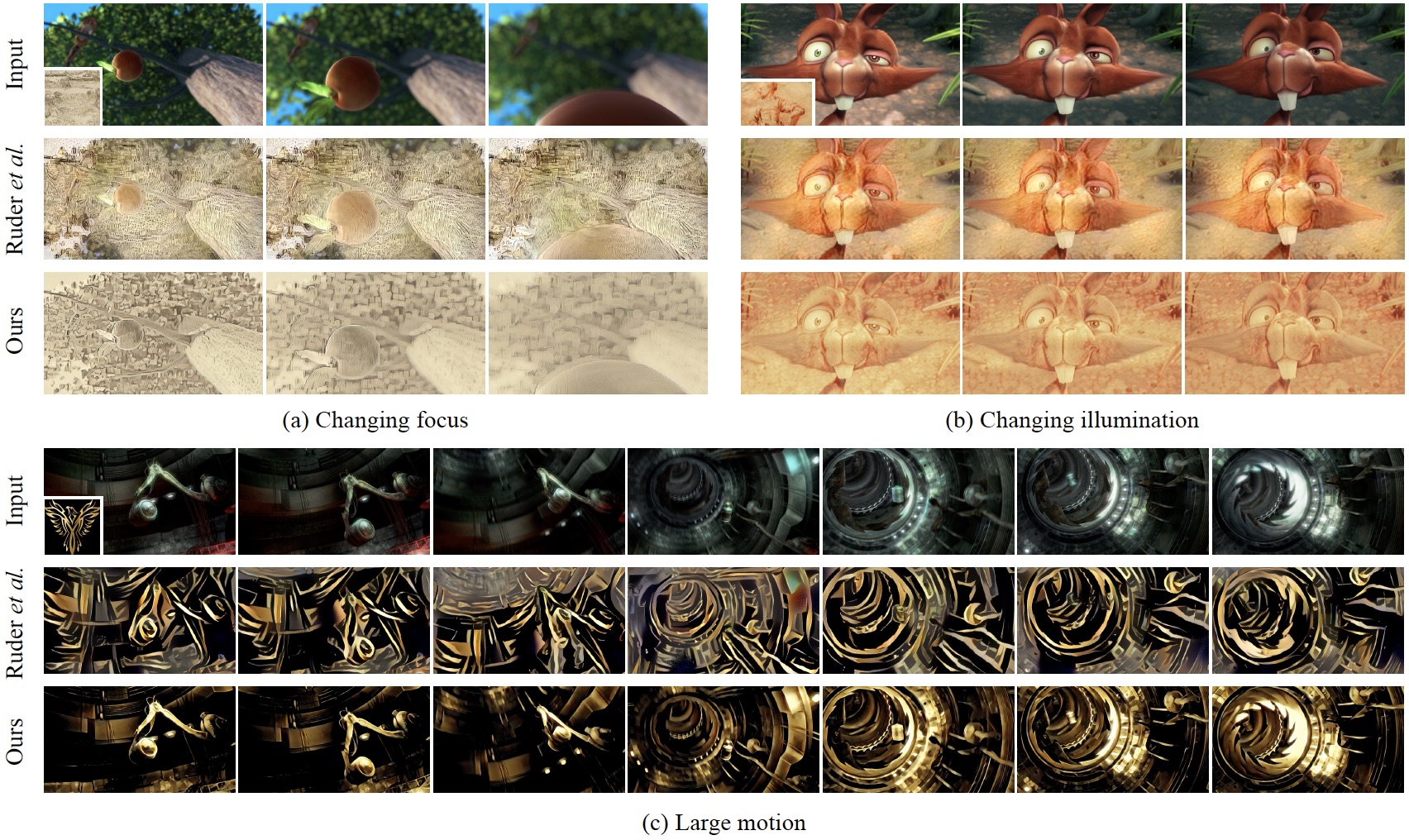

Figure 4. More comparison results on special cases of (a) changing focus, (b) changing illumination, (c) large motion. Compared with Ruder et al. [1], the result of our model fully reflects the content of the input video. Besides, the texture and color of our result are more consistent with the input style image.

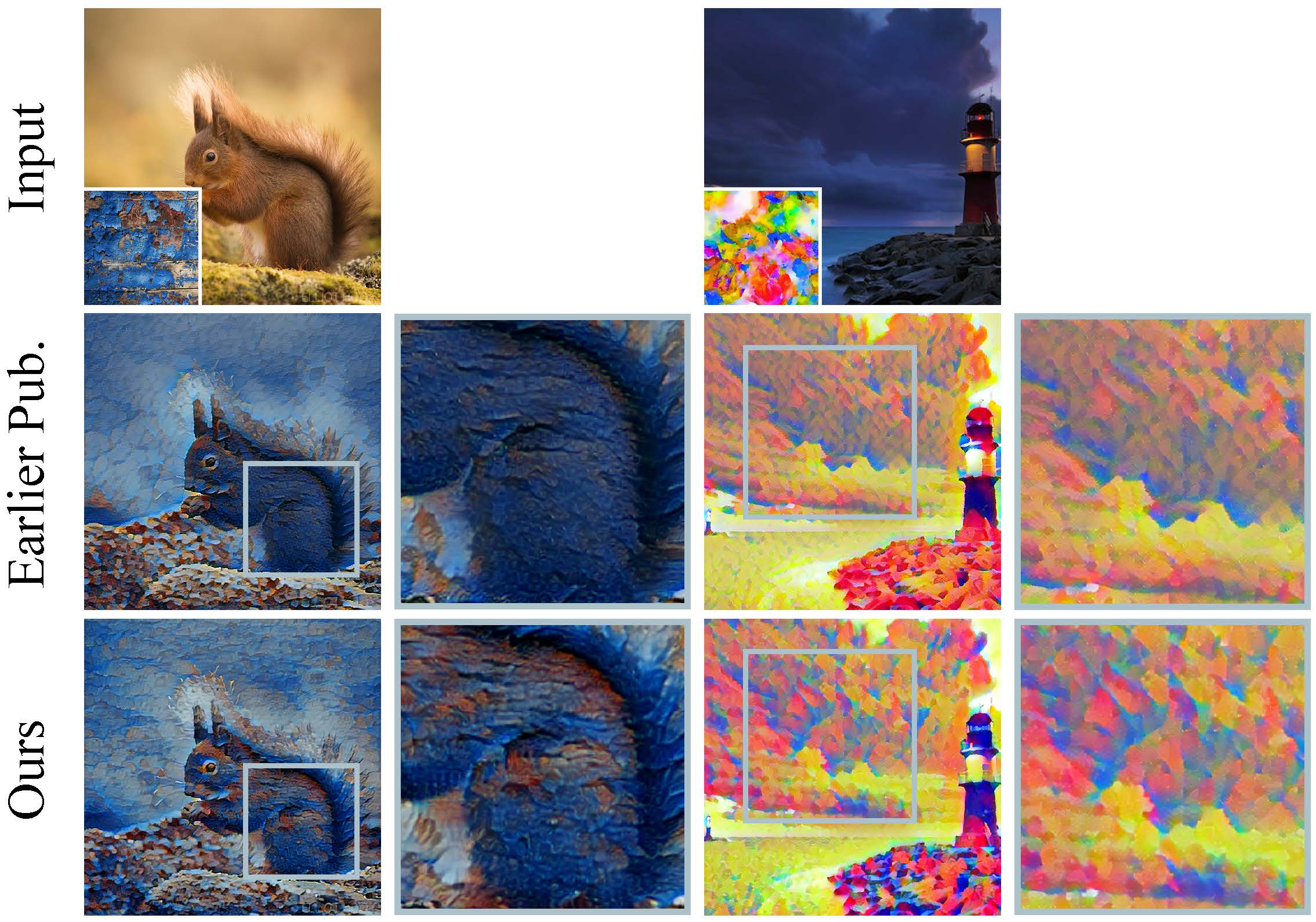

Figure 5. Qualitative comparison against our earlier publication [2]. With the proposed relaxed style loss, the color becomes richer, the details are clearer, and the texture of strokes is closer to the style reference. This demonstrates the effectiveness of our new style loss relaxation scheme.

Related Project

Consistent Video Style Transfer via Compound Regularization

More

Our project is used in two songs of the amazing Sound Escapes online concert.

References

[1] M. Ruder, A. Dosovitskiy, and T. Brox, “Artistic style transfer for videos,” in Proc. German Conference on Pattern Recognition, 2016, pp. 26–36.

[2] W. Wang, J. Xu, L. Zhang, Y. Wang, and J. Liu, “Consistent video style transfer via compound regularization,” in Proc. AAAI Conference on Artificial Intelligence, 2020, pp. 12 233–12 240.