GLADNet: Low-Light Enhancement Network with Global Awareness

FG, 2018 | PDF

* indicates equal contributions

Abstract

We address the problem of low-light enhancement. Our key idea is to first calculate a global illumination estimation for the low-light input, then adjust the illumination under the guidance of the estimation and supplement the details using a concatenation with the original input. Based on this idea, we propose a GLobal illumination-Aware and Detail-preserving Network (GLADNet). The input image is rescaled to a certain size and then fed into an encoder-decoder network to generate global prior knowledge of the illumination. Based on the global prior and the original input image, a convolutional network is employed for detail reconstruction. For training GLADNet, we use a synthetic dataset generated from RAW images. Extensive experiments demonstrate the superiority of our method over other compared methods on real low-light images captured in various conditions.

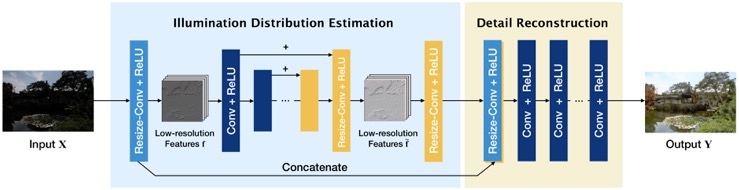

Network Architecture

The architecture of GLADNet. The architecture consists of two steps: a global illumination estimation step and a detail reconstruction step. In the first step, the encoder-decoder network produces an illumination estimation of a fixed size (96 × 96 here). In the second step, a convolutional network uses the input image and the outputs of the previous step to restore the details.
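The two-step data flow described above can be sketched in plain Python. This is a minimal illustration, not the authors' TensorFlow implementation: the names `resize_nearest`, `concat_channels`, `mean_brightness`, and `two_step_flow` are hypothetical, nearest-neighbour resizing is an assumed choice, the per-pixel mean is only a stand-in for the encoder-decoder, and resizing the estimate back to the input resolution before concatenation is an assumption about how the two steps connect.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an image stored as rows of channel tuples."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def concat_channels(a, b):
    """Concatenate two same-sized images along the channel axis."""
    return [[pa + pb for pa, pb in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def mean_brightness(img):
    """Stand-in for the encoder-decoder: one brightness value per pixel."""
    return [[(sum(p) / len(p),) for p in row] for row in img]


def two_step_flow(img, estimate_fn=mean_brightness, size=96):
    h, w = len(img), len(img[0])
    small = resize_nearest(img, size, size)          # step 1: rescale to a fixed size
    estimate = estimate_fn(small)                    # step 1: global estimate (stand-in)
    estimate_full = resize_nearest(estimate, h, w)   # assumed: back to input resolution
    return concat_channels(estimate_full, img)       # step 2 input: estimate + original


image = [[(0.1, 0.2, 0.3)] * 6 for _ in range(4)]    # toy 4 x 6 RGB image
features = two_step_flow(image)
```

In the real network, the concatenated tensor would be passed to the convolutional detail-reconstruction layers; here the sketch stops at the point where the global estimate and the original input are joined.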

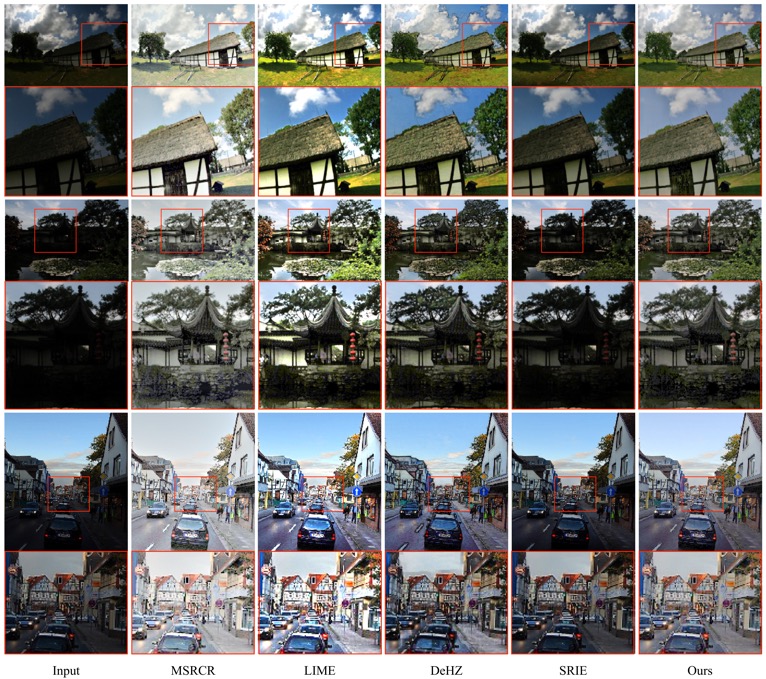

Experimental Results

The proposed method is implemented in TensorFlow and run on an NVIDIA GeForce GTX 1080. We compare the proposed method with MSRCR [1], LIME [2], DeHZ [3], and SRIE [4] and evaluate the results on the public LIME-data [2], DICM [6], and MEF [7] datasets.

Subjective evaluation:

Objective evaluation:

We use the Naturalness Image Quality Evaluator (NIQE) [5], a no-reference image quality score, for quantitative comparison. NIQE compares images to a default model computed from images of natural scenes. A smaller score indicates better perceptual quality. As shown in the table, our method achieves the lowest average score among the compared methods.

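NIQE scores (lower is better):

| Method | DICM | NPE | MEF | Average |
|---|---|---|---|---|
| MSRCR | 3.117 | 3.369 | 4.362 | 3.586 |
| LIME | 3.243 | 3.649 | 4.745 | 3.885 |
| DeHZ | 3.608 | 4.258 | 5.071 | 4.338 |
| SRIE | 2.975 | 3.127 | 4.042 | 3.381 |
| GLADNet | 2.761 | 3.278 | 3.468 | 3.184 |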
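Since a smaller NIQE score is better, the best method per dataset is simply the row with the minimum value in each column. The short sketch below copies the scores reported above into a dictionary and picks the minimum; the variable and function names (`niqe`, `best_method`) are illustrative only.

```python
# NIQE scores copied from the table above (lower is better).
niqe = {
    "MSRCR":   {"DICM": 3.117, "NPE": 3.369, "MEF": 4.362, "Average": 3.586},
    "LIME":    {"DICM": 3.243, "NPE": 3.649, "MEF": 4.745, "Average": 3.885},
    "DeHZ":    {"DICM": 3.608, "NPE": 4.258, "MEF": 5.071, "Average": 4.338},
    "SRIE":    {"DICM": 2.975, "NPE": 3.127, "MEF": 4.042, "Average": 3.381},
    "GLADNet": {"DICM": 2.761, "NPE": 3.278, "MEF": 3.468, "Average": 3.184},
}


def best_method(scores, column):
    """Return the method with the lowest score in the given column."""
    return min(scores, key=lambda method: scores[method][column])


best = {col: best_method(niqe, col) for col in ("DICM", "NPE", "MEF", "Average")}
```

With these numbers, GLADNet has the lowest score on DICM, MEF, and the average, while SRIE has the lowest score on NPE.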

Running time:

For MSRCR, LIME, DeHZ, and SRIE, we use the MATLAB code provided by Zhenqiang Ying on lowlight. These methods and the CPU version of GLADNet run on an Intel Core i5 at 2.7 GHz. The GPU version of GLADNet runs on an Intel Core i7-6850K at 3.60 GHz with an NVIDIA GeForce GTX 1080. We report the average running time per image when enhancing the MEF dataset.

| Method | Average time per image |
|---|---|
| MSRCR | 2.358 s |
| LIME | 0.105 s |
| DeHZ | 0.282 s |
| SRIE | 8.019 s |
| GLADNet (CPU) | 6.099 s |
| GLADNet (GPU) | 0.278 s |
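To make the timings easier to compare, the sketch below ranks the methods by the reported per-image time and computes the ratio between the CPU and GPU versions of GLADNet. The numbers are copied from the measurements above; the names `seconds_per_image`, `ranked`, and `gpu_speedup` are illustrative. Because the CPU and GPU runs used different host processors, the ratio should be read as indicative only.

```python
# Average running time per image on the MEF dataset, in seconds (from the text above).
seconds_per_image = {
    "MSRCR": 2.358,
    "LIME": 0.105,
    "DeHZ": 0.282,
    "SRIE": 8.019,
    "GLADNet (CPU)": 6.099,
    "GLADNet (GPU)": 0.278,
}

# Methods ordered from fastest to slowest.
ranked = sorted(seconds_per_image, key=seconds_per_image.get)

# Ratio of CPU time to GPU time for GLADNet (measured on different machines).
gpu_speedup = seconds_per_image["GLADNet (CPU)"] / seconds_per_image["GLADNet (GPU)"]

# Throughput in images per second for each method.
images_per_second = {name: 1.0 / t for name, t in seconds_per_image.items()}
```

On these figures, LIME is the fastest method, the GPU version of GLADNet is second, and the GPU version runs roughly 22 times faster than the CPU version.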

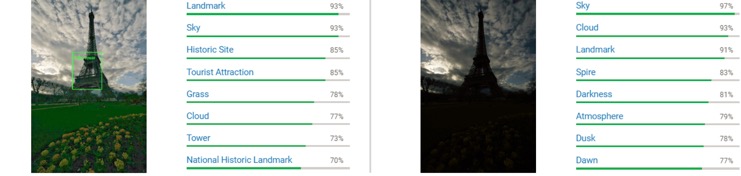

Applications on Computer Vision:

We test several real low-light images and their corresponding enhanced results on the Google Cloud Vision API. GLADNet helps the Google Cloud Vision API identify the objects in these images.

Results of the Google Cloud Vision API for “Eiffel Tower” from the MEF dataset. Before enhancement, Google Cloud Vision cannot recognize the Eiffel Tower. After enhancement by GLADNet, the Eiffel Tower is identified and marked by a green box.

Results for “Room” from the LIME-data dataset. The potted plant and the painting are not identified by Google Cloud Vision in the non-enhanced version.

Download Link

Trained model: Github

Training Data: Google Drive, Baidu Pan (Code: ti6k)

Reference

[1] D. J. Jobson, Z. Rahman, and G. A. Woodell, “A multiscale retinex for bridging the gap between color images and the human observation of scenes,” IEEE Transactions on Image Processing, vol. 6, no. 7, pp. 965–976, 1997.

[2] X. Guo, Y. Li, and H. Ling, “LIME: Low-light image enhancement via illumination map estimation,” IEEE Transactions on Image Processing, vol. 26, no. 2, pp. 982–993, 2017.

[3] X. Dong, Y. Pang, and J. Wen, “Fast efficient algorithm for enhancement of low lighting video,” IEEE International Conference on Multimedia and Expo, pp. 1–6, 2011.

[4] X. Fu, D. Zeng, Y. Huang, X. P. Zhang, and X. Ding, “A weighted variational model for simultaneous reflectance and illumination estimation,” Computer Vision and Pattern Recognition, pp. 2782–2790, 2016.

[5] A. Mittal, R. Soundararajan, and A. C. Bovik, “Making a ‘completely blind’ image quality analyzer,” IEEE Signal Processing Letters, vol. 20, no. 3, pp. 209–212, 2013.

[6] C. Lee, C. Lee, and C. S. Kim, “Contrast enhancement based on layered difference representation,” pp. 965–968, 2013.

[7] K. Ma, K. Zeng, and Z. Wang, “Perceptual quality assessment for multi-exposure image fusion,” IEEE Transactions on Image Processing, vol. 24, no. 11, p. 3345, 2015.